Today, a strong online presence is essential for any company, and search engine optimization (SEO) is one of the most reliable ways to increase web traffic. Technical SEO is the part of SEO that individuals and businesses most often overlook, yet it plays a major role in how well a website ranks in search engines. This blog post explains the core guidelines of technical SEO and how they help improve your website’s search engine rankings.

Technical SEO is the practice of optimizing a website’s infrastructure so that search engines can crawl, understand, and index it effectively. It covers the site’s architecture, the code it is built with, and the way its pages are organized and linked. Getting these technical aspects right improves your website’s performance and usability, and with them its position in search engine rankings.

Technical SEO is critically important in 2025 because it ensures that search engines can efficiently crawl, index, and understand your website, directly impacting search rankings and visibility. As Google’s algorithms evolve, page speed, mobile responsiveness, and secure HTTPS implementation have become established ranking considerations, while structured data determines whether pages are eligible for enhanced search features. A technically optimized website not only improves user experience by providing fast load times and stable, mobile-friendly layouts but also prevents issues like broken links, duplicate content, and crawl errors that could hinder indexing. Furthermore, proper technical SEO practices help sites earn rich results in search listings, increasing click-through rates and organic traffic. In an increasingly competitive online landscape, mastering technical SEO is indispensable for achieving and maintaining high search performance.

Core Web Vitals are a set of performance metrics introduced by Google that measure essential aspects of user experience related to page loading, interactivity, and visual stability. Because they reflect real-world user experience, Google uses them as part of the page experience signals in its ranking systems, which makes them an important input into how search engines assess the quality of a website in 2025.

Largest Contentful Paint (LCP):

LCP measures how long it takes, from the moment the page starts loading, for the largest image or text block visible in the viewport to render. A fast LCP (ideally 2.5 seconds or less) ensures that visitors perceive the page as loading quickly, which reduces bounce rates and improves engagement. Common causes of slow LCP include unoptimized images, render-blocking CSS, and slow server response times.

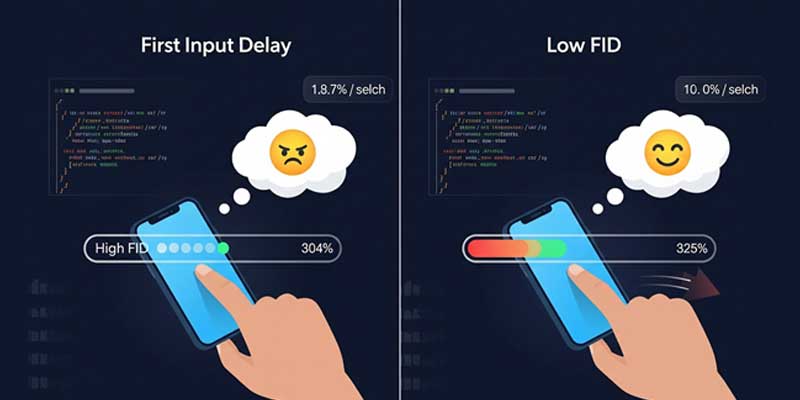

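First Input Delay (FID) and Interaction to Next Paint (INP):

FID measures the time from when a user first interacts with a page (like clicking a button or link) to when the browser can begin processing that interaction. A good FID score is 100 milliseconds or less. In March 2024, Google replaced FID with Interaction to Next Paint (INP) as the Core Web Vital for responsiveness. INP observes the latency of every click, tap, and key press during a visit and reports the longest one, ignoring outliers; 200 milliseconds or less is considered good. For both metrics, heavy JavaScript execution and inefficient event handlers are the typical culprits.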

Cumulative Layout Shift (CLS):

CLS quantifies unexpected layout shifts that occur while a page is open, which frustrate users when content moves as they try to interact with it. A CLS score of 0.1 or less is considered good. Poor CLS scores often result from images without dimensions, ads or embeds that load without reserved space, or dynamically injected content.
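The CLS score is not simply the sum of every shift. Under Google’s current definition, individual layout-shift scores are grouped into session windows (shifts less than 1 second apart, with each window capped at 5 seconds), and CLS is the largest window total. The Python sketch below illustrates that rule; it assumes you already have a list of (timestamp in milliseconds, shift score) pairs from which shifts caused by recent user input have been removed, and the function name `cumulative_layout_shift` is hypothetical.

```python
from typing import Iterable, Optional, Tuple


def cumulative_layout_shift(shifts: Iterable[Tuple[float, float]]) -> float:
    """Return the largest session-window total of layout-shift scores.

    `shifts` holds (timestamp_ms, score) pairs; shifts that followed
    recent user input are assumed to be excluded already.
    """
    best = 0.0
    window_total = 0.0
    window_start: Optional[float] = None  # time of the first shift in the window
    last_time: Optional[float] = None     # time of the previous shift
    for ts, score in sorted(shifts):
        starts_new_window = (
            window_start is None
            or ts - last_time >= 1000      # a gap of 1 s or more ends the window
            or ts - window_start >= 5000   # a window may span at most 5 s
        )
        if starts_new_window:
            window_start = ts
            window_total = 0.0
        window_total += score
        last_time = ts
        best = max(best, window_total)
    return best


# Two early shifts form one window (0.09); the late shift forms its own (0.2).
print(cumulative_layout_shift([(0, 0.05), (500, 0.04), (3000, 0.2)]))
```

Windowing exists so that long-lived pages, such as infinite-scroll feeds, are not penalized simply for staying open longer.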

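To measure Core Web Vitals, Google PageSpeed Insights and Google Search Console report field data from real users for LCP, INP (which replaced FID in March 2024), and CLS, while Lighthouse measures LCP and CLS in a lab environment and uses Total Blocking Time as a stand-in for responsiveness. All of them provide actionable recommendations.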
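These reports classify each metric as “good”, “needs improvement”, or “poor” against fixed thresholds, assessed at the 75th percentile of page visits. The Python sketch below applies Google’s published thresholds (LCP 2.5 s / 4 s, INP 200 ms / 500 ms, CLS 0.1 / 0.25, and the retired FID’s 100 ms / 300 ms). The `rate` and `percentile_75` helpers are hypothetical, and the nearest-rank percentile is a simple approximation, not the exact method the tools use.

```python
import math
from typing import Dict, List, Tuple

# (upper bound for "good", upper bound for "needs improvement"); anything above is "poor".
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds
    "FID": (100, 300),    # milliseconds (replaced by INP in March 2024)
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def percentile_75(samples: List[float]) -> float:
    """Nearest-rank 75th percentile of field measurements."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric: str, samples: List[float]) -> str:
    """Classify a metric's 75th-percentile value against its thresholds."""
    good, needs_improvement = THRESHOLDS[metric]
    value = percentile_75(samples)
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


print(rate("LCP", [1800, 2100, 2400, 3900]))
print(rate("INP", [90, 150, 250, 260]))
```

Because the 75th percentile is used, a few slow visits will not fail a page, but a consistently slow quarter of visits will.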

Improvement strategies include:

Adopting these practices helps create a faster, more stable, and interactive website, which not only improves user satisfaction but is now indispensable for SEO performance.

Mobile-First Indexing is a pivotal shift in how search engines crawl and rank web pages, where Google predominantly uses the mobile version of a website for indexing and ranking. This approach reflects the fact that over 60% of global web traffic now originates from mobile devices, making mobile optimization not optional but essential in 2025.

A mobile-responsive website adapts its layout, content, and functionality to provide an optimal viewing experience across different screen sizes, from smartphones to tablets. Poor mobile responsiveness results in text that is too small to read, buttons that are hard to tap, or layouts that break entirely, which leads to higher bounce rates and diminished rankings. Because Google indexes and ranks primarily the mobile version of each page, sites that deliver seamless mobile experiences are better positioned, while sites with poor mobile usability tend to lose visibility.

Several tools are available to analyze and improve mobile usability:

By regularly auditing mobile usability and implementing responsive design principles (fluid grids, flexible images, and CSS media queries), webmasters ensure that every user, regardless of device, enjoys a fast, accessible, and readable experience. As a result, sites not only rank higher but also engage visitors longer and reduce churn.
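Responsive design starts with the viewport meta tag (`<meta name="viewport" content="width=device-width, initial-scale=1">`). Without it, mobile browsers render the page at a desktop width and scale it down. As a quick audit aid, here is a Python sketch that uses only the standard library’s `html.parser` to check whether a page declares `width=device-width`. The `ViewportFinder` class and `has_responsive_viewport` function are hypothetical names, and a real audit would also test the rendered layout on actual devices.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportFinder(HTMLParser):
    """Records the content attribute of the first <meta name="viewport"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        if tag != "meta" or self.viewport is not None:
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "viewport":
            self.viewport = attributes.get("content", "")


def has_responsive_viewport(html: str) -> bool:
    """True if the page's viewport meta tag sets width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    # Normalize spacing such as "width = device-width" before comparing.
    directives = [part.strip().replace(" ", "") for part in finder.viewport.split(",")]
    return "width=device-width" in directives


page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
print(has_responsive_viewport(page))
```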

Page speed is one of the most important elements of technical SEO. Search engines, Google in particular, treat slow loading as a sign of poor user experience, and faster sites both satisfy users and tend to rank higher. To improve your site’s loading time, compress images and serve them in appropriate formats, minify CSS, JavaScript, and HTML files, enable caching, serve static content through a CDN, and lazy-load images and videos. Keep in mind that page speed is not only about how long the full page takes to load but also about how quickly users can see and interact with your content.
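For the caching step, a common pattern is to give fingerprinted static assets (for example `app.3f9a1c2b.js`) a long, immutable lifetime while making HTML revalidate on every visit, so returning visitors reuse assets but still see fresh pages. Here is a Python sketch of that policy. The file-naming convention, the extension list, and the one-year and one-hour lifetimes are assumptions to adapt to your own build pipeline.

```python
import re

# Assumed convention: the build tool inserts a hex content hash of 8+ characters
# before the extension, e.g. "app.3f9a1c2b.js".
FINGERPRINTED = re.compile(r"\.[0-9a-f]{8,}\.(?:js|css|png|jpe?g|webp|avif|svg|woff2)$")
STATIC_EXTENSIONS = (".js", ".css", ".png", ".jpg", ".jpeg", ".webp", ".avif", ".svg", ".woff2")


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value for a request path."""
    lowered = path.lower()
    if FINGERPRINTED.search(lowered):
        # New content means a new file name, so the old file can be cached for a year.
        return "public, max-age=31536000, immutable"
    if lowered.endswith(STATIC_EXTENSIONS):
        # Unversioned assets: cache briefly so updates are picked up within an hour.
        return "public, max-age=3600"
    # HTML and everything else: browsers may store it but must revalidate first.
    return "no-cache"


for path in ("/static/app.3f9a1c2b.js", "/static/logo.png", "/blog/technical-seo"):
    print(path, "->", cache_control_for(path))
```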

HTTPS reassures visitors that their connection is secure, and Google also uses it as a ranking signal. To migrate to HTTPS, obtain an SSL/TLS certificate from a trusted certificate authority, make sure every page on the site (including subdomains) is served over HTTPS, update internal links and resource URLs to HTTPS, and finally set up 301 (permanent) redirects from every HTTP page to its HTTPS equivalent.
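The final step, a 301 from every HTTP URL to its HTTPS equivalent, is usually configured in the web server or CDN. To show the logic itself, here is a minimal Python WSGI middleware sketch. It assumes the application sees the original scheme in `wsgi.url_scheme`; behind a proxy or load balancer you would need to read a forwarded header instead. `HTTPSRedirectMiddleware` is a hypothetical name, not part of any framework.

```python
from typing import Callable, Iterable
from urllib.parse import quote


class HTTPSRedirectMiddleware:
    """Send a 301 to the HTTPS version of any request that arrived over plain HTTP."""

    def __init__(self, app: Callable) -> None:
        self.app = app

    def __call__(self, environ: dict, start_response: Callable) -> Iterable[bytes]:
        if environ.get("wsgi.url_scheme") == "https":
            return self.app(environ, start_response)  # already secure: pass through
        host = environ.get("HTTP_HOST") or environ.get("SERVER_NAME", "")
        if host.endswith(":80"):
            host = host[:-3]  # the default HTTP port must not carry over to HTTPS
        # PEP 3333 delivers paths as latin-1 decoded strings; re-encode before quoting.
        raw_path = environ.get("SCRIPT_NAME", "") + environ.get("PATH_INFO", "")
        location = "https://" + host + quote(raw_path.encode("latin-1"))
        query = environ.get("QUERY_STRING", "")
        if query:
            location += "?" + query
        # 301 marks the move as permanent, so signals consolidate on the HTTPS URL.
        start_response("301 Moved Permanently", [("Location", location), ("Content-Length", "0")])
        return [b""]
```

Wrapping an existing WSGI application is then a single line, for example `app = HTTPSRedirectMiddleware(app)`.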

Schema markup is structured data, based on the schema.org vocabulary, that helps search engines understand the content and meaning of individual web pages. Well-implemented schema markup can make your pages eligible for rich results (rich snippets), which increases visibility and clicks. Commonly used types include Organization, LocalBusiness, Product, Review, and Article. Use schema markup wherever it accurately describes your content: it helps Google understand the page and can earn richer, more prominent listings for relevant queries.
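Schema markup is most often added as a JSON-LD `<script>` block in the page, using types from the schema.org vocabulary. The Python sketch below builds an `Article` snippet with the standard `json` module. The helper name `article_json_ld` and all field values are placeholders, and real output should be checked with Google’s Rich Results Test.

```python
import json
from typing import Optional


def article_json_ld(headline: str, author: str, date_published: str,
                    url: str, image: Optional[str] = None) -> str:
    """Return a <script> tag containing schema.org Article markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2025-01-15"
        "mainEntityOfPage": url,
    }
    if image:
        data["image"] = image
    # Escape "</" so no value can close the script element early.
    payload = json.dumps(data, ensure_ascii=False, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(article_json_ld("How to Optimize SEO", "Jane Doe", "2025-01-15",
                      "https://example.com/article/how-to-optimize-seo"))
```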

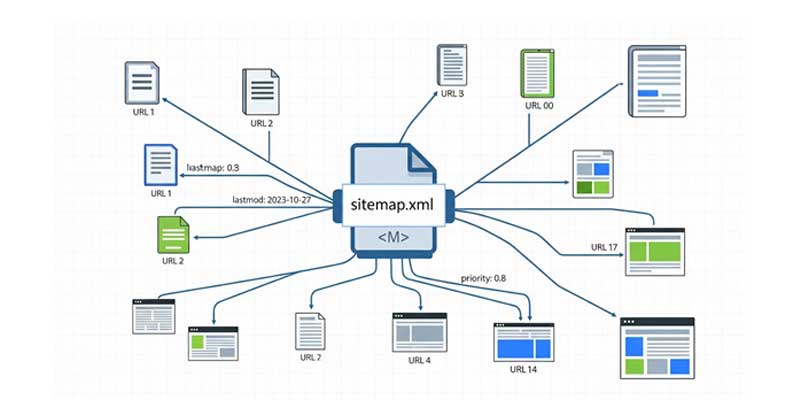

An XML sitemap lists the important pages on your website and helps search engines discover, crawl, and index them. Generating a sitemap and submitting it to search engines reduces the risk that some pages are never discovered or indexed. Sitemaps are especially valuable for large or complex sites. Recommended practices are to include all key pages, split large sitemaps into several files referenced from a sitemap index, keep them updated as URLs change, and submit them through Google Search Console and the equivalent tools of other search engines.
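Sitemaps follow the sitemaps.org protocol: a `<urlset>` in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace, with at most 50,000 URLs (and 50 MB uncompressed) per file. For that reason, large sites split them into several files and list the parts in a sitemap index. Here is a Python sketch using `xml.etree.ElementTree`. The function names are hypothetical, and the input is assumed to be a list of (URL, last-modified date) pairs from your CMS or crawler.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def build_sitemaps(pages: List[Tuple[str, Optional[str]]]) -> List[bytes]:
    """Turn (url, lastmod) pairs into one or more sitemap XML documents."""
    documents = []
    for start in range(0, len(pages), MAX_URLS_PER_SITEMAP):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for loc, lastmod in pages[start:start + MAX_URLS_PER_SITEMAP]:
            url = ET.SubElement(urlset, "url")
            ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and <
            if lastmod:
                ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2025-01-15
        documents.append(ET.tostring(urlset, encoding="utf-8", xml_declaration=True))
    return documents


def build_sitemap_index(sitemap_urls: List[str]) -> bytes:
    """List the individual sitemap files so crawlers can find all of them."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_urls:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = loc
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)


print(build_sitemaps([("https://example.com/", "2025-01-15")])[0].decode("utf-8"))
```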

The robots.txt file is a simple yet powerful tool for telling search engine crawlers which parts of a website they may or may not crawl. Configured properly, it keeps crawlers focused on the pages that matter and improves crawl efficiency. Note that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it, so use a noindex directive or password protection for content that must stay out of search results.

A common mistake is overly aggressive blocking, which can stop search engines from crawling important content. Examples of pitfalls include:

To avoid such mistakes, always review your robots.txt after updates and rely on tools such as Google Search Console to monitor crawl errors and indexation issues.
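Before deploying a changed robots.txt, you can check locally which URLs it blocks. Python’s standard library includes `urllib.robotparser`, and the sketch below parses rules from a string instead of fetching them. The rules and URLs are made-up examples. Be aware that this parser applies rules in file order with simple prefix matching, unlike Google’s longest-match precedence and wildcard support, so confirm edge cases in Search Console.

```python
from urllib.robotparser import RobotFileParser

# Example rules only; replace with the contents of your own robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
Allow: /

User-agent: Googlebot
Disallow: /staging/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # no network access; marks the rules as loaded
    return parser


parser = build_parser(ROBOTS_TXT)
for agent, url in [
    ("SomeBot", "https://example.com/blog/technical-seo"),
    ("SomeBot", "https://example.com/cartoons"),  # blocked: "/cart" is a prefix match
    ("Googlebot", "https://example.com/admin/"),  # allowed: Googlebot reads only its own group
]:
    print(agent, url, parser.can_fetch(agent, url))
```

The last two checks show two easy-to-miss behaviours: `Disallow: /cart` also blocks `/cartoons`, and a crawler that has its own group ignores the `*` group entirely, so shared rules must be repeated there.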

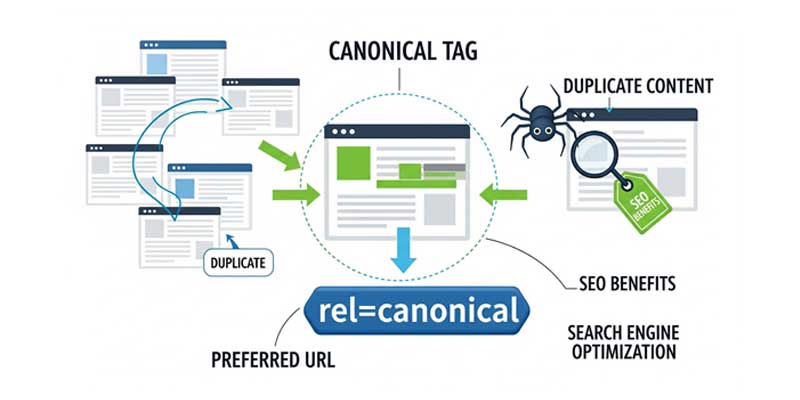

Canonical tags are HTML elements that help webmasters communicate to search engines which version of a page is the “master copy” when multiple URLs have identical or highly similar content. Without canonicalization, search engines may index several versions of the same content, diluting ranking signals and creating duplicate content issues that negatively affect SEO.

<link rel="canonical" href="https://example.com/product/shoes" />

<link rel="canonical" href="https://example.com/article/how-to-optimize-seo" />

<link rel="canonical" href="https://example.com/blog/" />

Proper canonicalization consolidates ranking signals to the correct URL, avoids duplicate content problems, and helps search engines show the preferred version of your content in search results.
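Duplicate URLs often differ only in details such as host letter case, tracking parameters, or fragments. One way to choose the URL for the canonical tag is to normalize every variant to a single form. Here is a Python sketch using `urllib.parse`. It rests on several assumptions that must match how your site actually serves pages: that `utm_*`, `gclid`, and `fbclid` never change page content, that parameter order is irrelevant, and that HTTPS is the preferred scheme. The function names are hypothetical.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Query parameters assumed to never change page content; adjust for your site.
TRACKING_PARAMS = {"gclid", "fbclid"}


def canonical_url(url: str) -> str:
    """Normalize a URL variant to a single preferred form."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if parts.port and parts.port not in (80, 443):
        host = f"{host}:{parts.port}"  # keep non-default ports
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS and not key.startswith("utm_")
    )  # sorting assumes parameter order never changes the page
    # Force HTTPS, keep the path exactly (trailing slash included), drop the fragment.
    return urlunsplit(("https", host, parts.path or "/", urlencode(query), ""))


def canonical_link_tag(url: str) -> str:
    return f'<link rel="canonical" href="{escape(canonical_url(url))}" />'


print(canonical_link_tag("http://Example.com/product/shoes?utm_source=mail&color=red#reviews"))
```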

A well-organized URL structure and strategic internal linking play a vital role in helping search engines understand the hierarchy of your website, distributing page authority effectively, and improving overall SEO performance.

A clean URL structure is simple, descriptive, and easy to read for both users and search engines. Best practices include:

Internal linking improves site navigation, distributes link equity, and helps search engines discover new pages. Key strategies include:

By maintaining a clean URL structure combined with strategic internal linking, your website becomes easier to crawl and index, enhances user experience, and strengthens your SEO authority.
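Descriptive, readable URLs are usually produced by turning a page title into a lowercase, hyphen-separated slug. The minimal Python sketch below does this. Transliterating accented letters to plain ASCII, dropping other characters, and the 60-character limit are common conventions rather than requirements, and `slugify` here is a hypothetical helper, not a specific library’s function.

```python
import re
import unicodedata


def slugify(title: str, max_length: int = 60) -> str:
    """Convert a title into a short, lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + accent (NFKD), then drop non-ASCII marks.
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace every run of characters other than a-z and 0-9 with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower()).strip("-")
    # Trim long slugs back to the last whole word that fits.
    if len(slug) > max_length:
        slug = slug[:max_length].rsplit("-", 1)[0]
    return slug


print(slugify("How to Optimize SEO in 2025!"))
```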

Proper management of crawl errors and redirects is essential to maintaining a healthy website that performs well in search engines. Ignoring these technical elements can lead to wasted crawl budget, poor user experience, and loss of link equity.

Crawl errors occur when search engine bots attempt to access pages that are unavailable or misconfigured. These are typically categorized into:

To detect crawl errors:

301 Redirect (Permanent):

Used when a page has permanently moved to a new URL. This passes nearly all ranking signals from the old URL to the new one, helping preserve SEO value.

Example:

Redirect 301 /old-page https://example.com/new-page

302 Redirect (Temporary):

Indicates that a page has moved temporarily. Search engines generally keep the original URL indexed instead of consolidating signals on the new one, so a 302 should only be used when the change is short-term (e.g., during maintenance).

Example:

Redirect 302 /temporary-page https://example.com/maintenance

Best practice is to use 301 redirects for permanent content moves to maintain SEO performance and prevent indexation of outdated URLs. Additionally, avoid redirect chains (multiple redirects leading to the final destination), as they slow down crawl efficiency and degrade page load speed.
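Redirect chains and loops are easy to introduce when old rules pile up over several migrations. Given a redirect map exported from your server configuration or a crawl (the dictionary below is a made-up example), the short Python sketch below resolves each source URL to its final destination and flags anything that loops or takes more than one hop. The `resolve` and `audit` names are hypothetical.

```python
from typing import Dict, List, Tuple


def resolve(redirects: Dict[str, str], start: str, max_hops: int = 10) -> Tuple[List[str], bool]:
    """Follow redirects from `start`; return the URLs visited and whether a loop occurred."""
    path = [start]
    seen = {start}
    current = start
    while current in redirects and len(path) <= max_hops:
        current = redirects[current]
        if current in seen:
            return path + [current], True  # loop: this URL never resolves
        path.append(current)
        seen.add(current)
    return path, False


def audit(redirects: Dict[str, str]) -> Dict[str, str]:
    """Report sources that loop or need more than one hop to reach a final URL."""
    report: Dict[str, str] = {}
    for source in redirects:
        path, looped = resolve(redirects, source)
        if looped:
            report[source] = "loop: " + " -> ".join(path)
        elif len(path) > 2:
            # Point the source straight at the last URL to collapse the chain.
            report[source] = f"chain of {len(path) - 1} hops; redirect directly to {path[-1]}"
    return report


# Hypothetical redirect map, e.g. left behind by two site migrations.
REDIRECTS = {
    "/old-page": "/interim-page",
    "/interim-page": "/new-page",
    "/a": "/b",
    "/b": "/a",
}

for source, problem in audit(REDIRECTS).items():
    print(source, "=>", problem)
```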

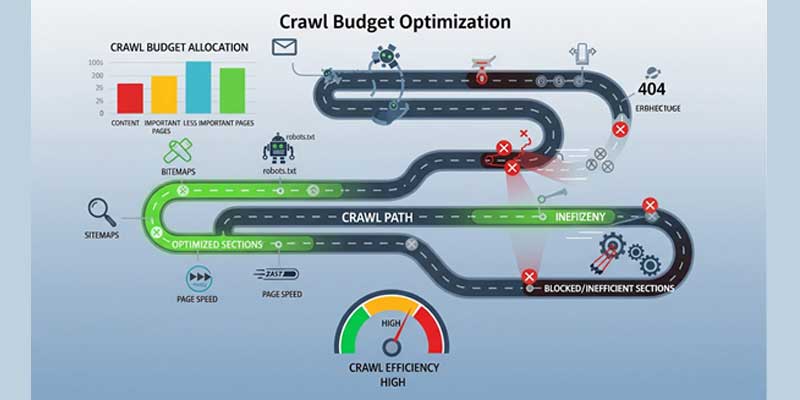

Search engines allocate a limited amount of crawling to each site, known as its crawl budget. To make the best use of it, remove low-value or duplicate pages (or mark them noindex), fix broken links and 404 errors, use robots.txt to steer crawlers away from unimportant sections, and improve your server’s response times so crawlers can fetch more pages in the same amount of time.

In 2025, AI-powered SEO tools have become indispensable for maintaining a competitive edge. These tools leverage machine learning algorithms to analyze vast amounts of data, detect patterns, and make actionable recommendations faster than traditional methods. They automate tasks such as keyword research, competitor analysis, content optimization, and backlink profiling. Tools like MarketMuse, SurferSEO, and Clearscope analyze search intent and suggest semantically relevant keywords, helping content creators build topic authority. AI also enables predictive analytics, forecasting which technical issues might impact future rankings, allowing proactive adjustments before problems arise.

Log file analysis offers a granular look at how search engine bots interact with your site, providing crucial insights that no other tool can fully capture. Each time a search bot requests a page, your web server records details such as the user-agent, timestamp, HTTP status code, and the URL accessed. By analyzing these logs, you can:

Using tools such as Splunk, Screaming Frog Log File Analyser, or Elastic Stack (ELK), SEO experts can parse terabytes of log data to reveal hidden issues, such as unexpected blocks from robots.txt or excessive crawling of low-value pages. This approach helps optimize crawl efficiency and ensures high-priority content is indexed promptly.
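Before reaching for those tools, a small script can already answer basic questions. The Python sketch below reads access-log lines in the common Apache/Nginx “combined” format, keeps requests whose user-agent claims to be Googlebot, and counts status codes and requested paths. The regular expression assumes that log format. User-agent strings can be spoofed, and Google recommends verifying genuine Googlebot traffic with a reverse DNS lookup, which this sketch does not do.

```python
import re
from collections import Counter
from typing import Iterable, Tuple

# Combined log format: host ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_summary(lines: Iterable[str]) -> Tuple[Counter, Counter]:
    """Count status codes and requested paths for hits claiming to be Googlebot."""
    statuses: Counter = Counter()
    paths: Counter = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip malformed lines and other clients
        statuses[match.group("status")] += 1
        paths[match.group("path")] += 1
    return statuses, paths
```

With real logs, `paths.most_common(20)` shows where crawl activity concentrates, and a high share of 404 or 3xx statuses points to wasted crawl budget.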

A Content Delivery Network (CDN) plays a dual role in modern SEO by dramatically improving site speed and providing an extra layer of security. CDNs distribute your site’s static resources (images, scripts, stylesheets) across a global network of servers, reducing latency by serving content from the location closest to the user. Faster load times directly improve Core Web Vitals such as LCP, which supports rankings and reduces bounce rates.

From a security perspective, CDNs offer DDoS protection, Web Application Firewalls (WAF), and SSL encryption, ensuring both user safety and search engine trust. Leading providers like Cloudflare, Akamai, and Fastly offer advanced caching rules, HTTP/3 support, and automated content purging, all of which maintain optimal site performance under varying traffic loads.

For global businesses, managing international SEO is essential to ensure the right content is shown to the right audience in the correct language or region. The hreflang tag tells search engines about language and regional targeting, preventing duplicate content issues across different country or language versions of a page.

Example implementation:

<link rel="alternate" hreflang="en-us" href="https://example.com/us/page" />
<link rel="alternate" hreflang="en-gb" href="https://example.com/uk/page" />
<link rel="alternate" hreflang="fr-fr" href="https://example.com/fr/page" />

Best practices include:

Failure to implement hreflang properly may result in search engines serving the wrong version, reducing user engagement and visibility in target markets.
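Each language or regional version should list every alternate, including itself, and the annotations must be reciprocal: if page A points to page B, page B must point back to A. Generating the tags from one shared mapping keeps them consistent. In the Python sketch below, the locale-to-URL mapping mirrors the example implementation above, while the `x-default` entry (Google’s fallback for users who match none of the listed locales) and its URL are hypothetical additions. The function name `hreflang_tags` is also illustrative.

```python
from html import escape
from typing import Dict, Optional


def hreflang_tags(alternates: Dict[str, str], x_default: Optional[str] = None) -> str:
    """Build the full set of hreflang link tags shared by every version of a page."""
    entries = sorted(alternates.items())  # stable order keeps diffs easy to review
    if x_default:
        entries.append(("x-default", x_default))
    return "\n".join(
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in entries
    )


ALTERNATES = {
    "en-us": "https://example.com/us/page",
    "en-gb": "https://example.com/uk/page",
    "fr-fr": "https://example.com/fr/page",
}

# The same block goes into the <head> of all three pages, keeping the annotations reciprocal.
print(hreflang_tags(ALTERNATES, x_default="https://example.com/page"))  # x-default URL is hypothetical
```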

Screaming Frog is a powerful tool for a full-site crawl, detecting broken links, duplicate content, missing metadata, oversized images, and more. It simulates how search engines crawl your site, exposing issues that may prevent optimal indexing or ranking.

Google Search Console provides direct insight into how Google perceives your site. Its Page indexing report (formerly called Coverage) highlights indexing issues, the Core Web Vitals report flags pages with poor loading, responsiveness, or visual stability, and the Performance report shows which queries bring traffic. Pay close attention to errors like “submitted URL blocked by robots.txt” or “soft 404”.

After identifying issues, implement fixes such as:

Ensure your site architecture follows a logical, shallow structure where important pages are reachable within 2–3 clicks from the homepage.
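Click depth can be measured with a breadth-first search (BFS) over the internal-link graph, starting from the homepage: the first time BFS reaches a page gives the fewest clicks needed to get there. The Python sketch below assumes you have already extracted each page’s internal links, for example from a crawler export. The site graph and the three-click limit are illustrative.

```python
from collections import deque
from typing import Dict, List


def click_depths(links: Dict[str, List[str]], home: str = "/") -> Dict[str, int]:
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: page -> pages it links to.
site = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/page-2/"],
    "/blog/page-2/": ["/blog/page-3/"],
    "/blog/page-3/": ["/blog/old-post/"],
    "/services/": ["/services/technical-seo/"],
    "/landing/": ["/services/"],  # links out, but nothing links to it
}

depths = click_depths(site)
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
# Only pages known from the crawl data (the dict keys) can be detected as orphans.
orphans = sorted(set(site) - set(depths))
print("too deep:", too_deep, "orphans:", orphans)
```

Pages in `too_deep` are candidates for links from category pages or the homepage. Pages in `orphans` have no internal path from the homepage, so crawlers may only find them through the sitemap.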

Regularly monitor Core Web Vitals using Google PageSpeed Insights and Lighthouse. Optimize for LCP, INP (which replaced FID in March 2024), and CLS by implementing caching strategies, image optimization, code splitting, and deferring non-essential scripts. Set up automated monitoring to track performance over time.

Technical SEO is an essential part of any overall SEO plan. By following the best practices above, you can improve both your website’s search engine performance and its usability. Whether you work with a local SEO agency in Bangalore, hire an SEO company in Chennai, or manage SEO in-house, technical SEO helps you achieve better rankings in search engines.

Remember that SEO is a continuous process, so revisit your site’s structure periodically and make changes as needed. Use Google Analytics and Google Search Console to evaluate your site’s performance and identify problem areas. By keeping up with current technical SEO standards and improving continuously, you help ensure that your pages are crawled and indexed effectively, that users get high-quality content and a good experience, and that your rankings improve over time.

These technical SEO recommendations cannot all be implemented quickly; some require time and professional expertise. If you are unsure where to start or too busy to handle them yourself, consider hiring a competent SEO company that can guide you through technical SEO and build a sound SEO framework for your business. Whether you apply this knowledge yourself or hire an experienced specialist, you will be better positioned to increase your business’s online visibility and the satisfaction of your users and customers.