A/B testing in digital marketing is a method of comparing two versions of a marketing asset (such as an ad, email, or landing page) to determine which one performs better. Instead of relying on assumptions, it uses real customer behavior and data to guide marketing decisions. For businesses investing in campaigns, especially those partnering with a digital marketing agency in Bangalore, A/B testing provides a systematic approach to optimizing strategies and ensuring that resources are directed toward the most effective outcomes.

The core idea of A/B testing is simple: divide your audience into two groups and present them with different versions of the same element. One group sees the “control” (version A), while the other interacts with the “variation” (version B). The results, measured through metrics such as click-through rate (CTR), conversion rate, or engagement, indicate which version delivers better performance.

The purpose of A/B testing goes beyond finding a winning variation. It empowers marketers to:

For brands running paid campaigns, especially through a PPC agency in Bangalore, A/B testing ensures that ad creatives, keywords, and calls-to-action are fine-tuned for maximum performance.

While A/B testing focuses on comparing two variations of a single element, multivariate testing evaluates multiple elements simultaneously. For instance, if an A/B test examines two different headlines, a multivariate test might explore different combinations of headlines, images, and button colors all at once.

In practice, businesses often start with A/B testing to build confidence in data-driven decision-making and then scale into multivariate experiments once they have the audience size and resources to support deeper insights.
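
To see why multivariate tests need a larger audience, it helps to count the combinations. The short Python sketch below uses made-up headline, image, and button-color options and an assumed traffic figure (none of them come from a real campaign), and it assumes traffic is split evenly across all combinations.

```python
from itertools import product

# Hypothetical options for each element (illustrative only)
headlines = ["Save time today", "Work smarter"]
images = ["team_photo", "product_shot", "illustration"]
button_colors = ["green", "orange"]

# Every combination of headline, image, and button color is its own variant
combinations = list(product(headlines, images, button_colors))
num_variants = len(combinations)  # 2 * 3 * 2

# With an even split, each variant sees only a fraction of total traffic
monthly_visitors = 60_000  # assumed traffic figure
visitors_per_variant = monthly_visitors // num_variants

print(f"Variants to test: {num_variants}")
print(f"Visitors per variant: {visitors_per_variant}")
```

An A/B test on the same traffic would give each of its two versions 30,000 visitors, which is why the simpler test usually reaches a reliable answer sooner.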

A/B testing is not just a technical method; it is one of the most reliable ways to ensure marketing strategies are guided by evidence rather than assumptions. In today’s competitive landscape, where customer attention is fragmented, businesses need to know exactly what resonates with their audience. A/B testing provides this clarity by showing which variation of a campaign performs best in real-world conditions.

The true power of A/B testing lies in its ability to generate actionable insights. Instead of relying on intuition or creative preference, marketers can see how users actually behave.

For example, comparing two subject lines in an email campaign reveals which one achieves higher open rates. Similarly, testing different landing page headlines helps determine which message encourages more sign-ups. Over time, these experiments build a valuable knowledge base that guides future strategies.

Data-driven insights help marketing teams:

This approach ensures that every campaign adjustment is backed by reliable evidence, leading to smarter decisions and stronger outcomes.

A/B testing directly impacts two of the performance metrics businesses care about most: conversions and return on investment. Even small optimizations, such as a change in call-to-action wording or button placement, can produce a measurable increase in engagement rates.

For instance, if a variation improves click-through rates by just 5%, that improvement can cascade across the sales funnel, leading to more leads, more sales, and higher revenue. These incremental gains compound over time, producing measurable business growth.
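
The arithmetic behind that cascade can be sketched in a few lines of Python. Every number here is an assumption chosen for illustration: 100,000 impressions, a 2% baseline click-through rate, and fixed lead, close, and revenue figures. The sketch also assumes the 5% is a relative lift and that the later funnel stages stay unchanged, which may not hold in a real campaign.

```python
def funnel_revenue(impressions, ctr, lead_rate, close_rate, revenue_per_sale):
    """Walk a simple funnel: impressions -> clicks -> leads -> sales -> revenue."""
    clicks = impressions * ctr
    leads = clicks * lead_rate
    sales = leads * close_rate
    return sales * revenue_per_sale

# Assumed baseline funnel (illustrative numbers only)
baseline = funnel_revenue(100_000, 0.020, 0.10, 0.20, 500)

# Same funnel with a 5% relative lift in click-through rate
improved = funnel_revenue(100_000, 0.020 * 1.05, 0.10, 0.20, 500)

print(f"Baseline revenue: {baseline:,.0f}")
print(f"Revenue with 5% higher CTR: {improved:,.0f}")
print(f"Extra revenue: {improved - baseline:,.0f}")
```

Under these assumptions, the 5% lift at the top of the funnel carries through as a 5% lift in revenue, because each later stage is a fixed share of the stage before it.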

In addition, A/B testing reduces wasted marketing spend. By identifying and eliminating underperforming strategies, businesses can focus their investment on what truly works. This creates a cycle of continuous optimization where campaigns are not only creative but also profitable.

A/B testing is most effective when it follows a structured process. Skipping steps or rushing through the setup can lead to misleading results, wasted resources, or decisions based on incomplete data. Below is a step-by-step approach to running A/B tests that ensures accuracy and meaningful insights.

Every successful A/B test begins with a clear goal. This could be increasing the click-through rate of an ad, improving the conversion rate on a landing page, or boosting email open rates. Once the goal is established, formulate a hypothesis that outlines what change you believe will deliver better results.

For example:

A strong hypothesis is specific, measurable, and directly tied to a business objective. Without this clarity, the test may generate results that are difficult to interpret or apply.

The next step is deciding what element of your marketing asset to test. To maintain accuracy, focus on one variable at a time. This ensures you can clearly attribute performance differences to that specific change.

Common variables include:

By isolating one change per test, you avoid confusion and gain confidence in the results. If multiple factors need testing, plan a sequence of experiments instead of combining them in a single round.

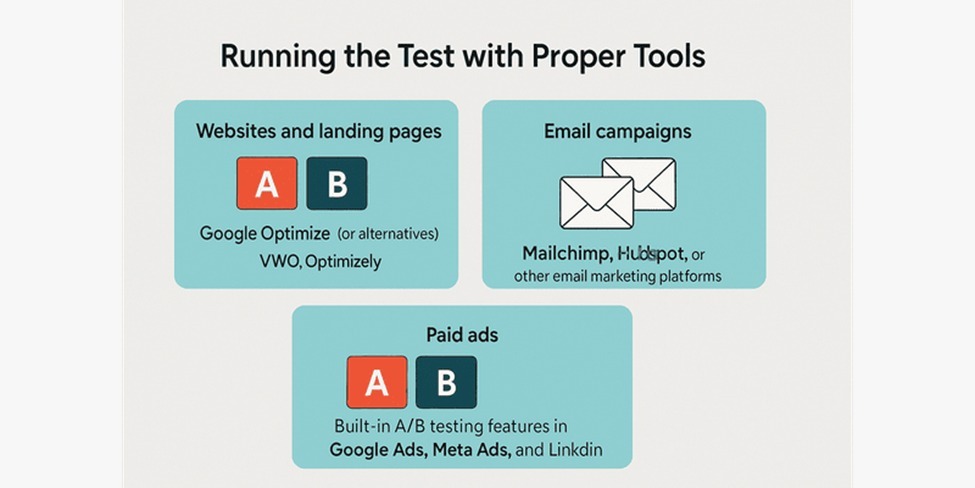

Once variables are chosen, the test must be executed with reliable tools that can split traffic accurately. Modern platforms simplify this process:

When running the test, divide your audience randomly into two groups of adequate size. This prevents bias and ensures that the results represent true user behavior. Also, let the test run for a statistically valid duration—ending it too early can lead to false conclusions.
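
Testing platforms handle the split for you, but the idea can be sketched in Python. This is one possible approach, not how any particular tool works: it hashes a user ID together with an experiment name so the split is roughly 50/50 and a returning user always sees the same version. The function name `assign_variant` and the experiment name are hypothetical.

```python
import hashlib

def assign_variant(user_id: str, experiment: str = "landing_page_headline") -> str:
    """Return 'A' (control) or 'B' (variation) for a user, roughly 50/50."""
    # Hashing the experiment name with the user ID keeps assignments
    # stable per user and independent across different experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # bucket in the range 0..99
    return "A" if bucket < 50 else "B"

# Check the balance of the split on 10,000 made-up user IDs
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}")] += 1

print(counts)
```

Because the assignment depends only on the ID and the experiment name, no lookup table is needed to remember who saw which version.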

After the test period ends, review the data carefully. Look for statistically significant differences between the control (A) and the variation (B). Key performance indicators (KPIs) such as click-through rate, conversion rate, or revenue per visitor should guide the evaluation.

Steps for effective analysis:

If the variation outperforms the control, it can be rolled out as the new default. If not, the test still provides valuable learnings about what does not work, which can inform future iterations.
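
One common way to check whether a difference in conversion rate is statistically significant is a two-proportion z-test. The sketch below is a minimal version using only the Python standard library; the visitor and conversion counts are invented for illustration, and the 0.05 threshold is a widely used convention rather than a rule from this article.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that A and B perform the same
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Illustrative counts: 200 of 5,000 visitors converted on A, 250 of 5,000 on B
z, p_value = two_proportion_z_test(200, 5000, 250, 5000)

print(f"z = {z:.2f}, p-value = {p_value:.4f}")
if p_value < 0.05:
    print("The difference is statistically significant at the 5% level.")
else:
    print("The difference could plausibly be due to chance.")
```

A small p-value means a gap this large would be unlikely if the two versions truly performed the same. It does not say how large the real improvement is, so it is worth reporting the observed rates alongside it.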

A/B testing is versatile and can be applied across different channels of digital marketing. By testing in the right places, businesses can improve user experience, boost engagement, and achieve better overall performance.

Websites and landing pages are among the most common areas where A/B testing delivers quick wins. Small changes in page design, headlines, forms, or calls-to-action can have a significant impact on user behavior. For example, testing a shorter lead form versus a longer one can show which results in more sign-ups.

These experiments are an integral part of conversion rate optimization, as they help brands systematically identify which elements drive more conversions. A/B testing ensures that every design or copy adjustment is based on actual user data rather than assumptions.

In digital advertising, A/B testing helps refine creative assets and targeting. Marketers can test different ad headlines, images, or audience segments to identify which combination drives the highest click-through or conversion rate.

For social campaigns, testing variations of visuals, captions, or even hashtag usage can reveal what resonates most with the target audience. This approach ensures ad spend is optimized and campaigns generate stronger results.

Email campaigns are another area where A/B testing is highly effective. Subject lines, sender names, content layouts, and call-to-action buttons can all be tested to measure their influence on open rates and click-throughs.

For example, testing whether a personalized subject line outperforms a generic one can provide actionable insights that improve future campaigns. Over time, consistent testing leads to more engaging emails and better audience relationships.

Choosing the right tools is critical for executing reliable A/B tests. Effective platforms not only divide traffic between variations but also track performance, measure statistical significance, and provide insights to guide decision-making.

Google Optimize was once a popular choice for running A/B tests on websites, but with its discontinuation, marketers now rely on alternatives that integrate with Google Analytics 4 and other platforms. These tools allow seamless tracking of user behavior while providing robust testing capabilities.

Some widely used options include:

These alternatives help businesses continue experimentation without losing the data-driven insights once powered by Google Optimize.

Beyond website-specific tools, there are dedicated platforms designed to support A/B testing across digital channels:

Each platform offers unique strengths, but the best choice depends on the scale of campaigns, technical requirements, and the level of personalization a brand aims to achieve.

Running an A/B test is straightforward in theory but requires discipline in execution. Many businesses fail to achieve meaningful results because they overlook essential testing principles or draw conclusions too early. Following best practices while avoiding common mistakes ensures that tests lead to actionable insights instead of misleading data.

One of the most common errors in A/B testing is trying to evaluate too many changes at once. If multiple elements are altered simultaneously—such as a headline, button color, and image—it becomes impossible to identify which change influenced the outcome.

The best practice is to focus on one variable at a time. For example, if the goal is to improve a landing page conversion rate, start by testing only the headline. Once the winner is clear, move on to testing another element like the call-to-action or image placement. This step-by-step approach produces reliable insights and builds a foundation for long-term optimization.

Another frequent mistake is relying on results that look promising but are not statistically significant. Statistical significance indicates that the observed difference between two versions is unlikely to be due to chance alone. Without it, businesses risk making decisions based on random variations in user behavior.

To avoid this, marketers should:

By respecting statistical significance, marketers can trust their results and make confident, data-backed changes.

Ending a test too early is a pitfall that undermines accuracy. Early results may appear to favor one variation, but user behavior can fluctuate over time. Factors such as traffic sources, time of day, or day of the week can temporarily skew outcomes.

The best practice is to let the test run for its full duration and predefined sample size. Even if one version seems to “win” early, conclusions should only be drawn once enough data has been collected. This discipline prevents misinterpretation and ensures that decisions are grounded in reliable evidence.
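
A planned duration can be estimated before launch. The Python sketch below assumes you already know the required sample size per version and your average daily traffic; both figures are placeholders. Rounding up to whole weeks is an added assumption, intended to cover the day-of-week effects mentioned above.

```python
from math import ceil

def planned_duration_days(sample_per_variant, daily_visitors, variants=2):
    """Estimate how many days a test should run, rounded up to full weeks."""
    total_needed = sample_per_variant * variants
    days = ceil(total_needed / daily_visitors)
    # Round up to whole weeks so every weekday is represented equally
    return ceil(days / 7) * 7

required_per_variant = 6_800  # assumed result of a sample size calculation
daily_visitors = 1_500        # assumed average daily traffic to the page

days = planned_duration_days(required_per_variant, daily_visitors)
print(f"Planned test duration: {days} days")
```

Fixing the end date in advance makes it easier to resist stopping the test the moment one version pulls ahead.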

While A/B testing is a powerful tool for improving digital marketing performance, it is not without its constraints. Marketers must be aware of the limitations that can affect the reliability of results. Recognizing these challenges helps in setting realistic expectations and designing better experiments.

One of the biggest hurdles in A/B testing is dealing with insufficient sample sizes. For a test to be statistically valid, it needs a large enough audience to reflect meaningful patterns. Running a test on too few visitors or impressions can produce misleading results that do not hold up when applied on a larger scale.

Common problems caused by small sample sizes include:

The solution is to calculate the required sample size before starting the test and allow the experiment to run long enough to collect sufficient data. This ensures confidence in the results and prevents wasted effort.
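
A rough sample size can be estimated with a standard formula for comparing two conversion rates. The sketch below is an approximation using the Python standard library. The baseline rate, the expected rate, the 5% significance level, and the 80% power are all assumptions you would replace with your own values.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_variant(p_control, p_variation, alpha=0.05, power=0.80):
    """Approximate visitors needed per version to detect the given difference."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p_control * (1 - p_control) + p_variation * (1 - p_variation)
    effect = p_variation - p_control
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Assumed scenario: 4% baseline conversion rate, hoping to detect 5%
n = sample_size_per_variant(0.04, 0.05)
print(f"Visitors needed per version: {n}")  # roughly 6,700

# A smaller expected lift needs far more traffic
n_small = sample_size_per_variant(0.04, 0.045)
print(f"Visitors needed for a smaller lift: {n_small}")
```

The required audience grows quickly as the expected difference shrinks, which is why low-traffic pages are better suited to testing bold changes than subtle ones.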

Even with a solid design, external factors can affect the accuracy of A/B test results. User behavior does not occur in isolation—it can be influenced by events or conditions outside of the marketer’s control.

Examples include:

These factors can distort the performance of both control and variation groups, making it harder to isolate the true impact of the tested change. To mitigate this, marketers should account for timing, run tests long enough to smooth out fluctuations, and revalidate results when necessary.

As digital marketing evolves, so does the way A/B testing is applied. Traditional split testing remains valuable, but advancements in artificial intelligence and personalization are reshaping experimentation. The future of A/B testing lies in more adaptive, intelligent, and user-focused approaches that go beyond static comparisons.

Artificial intelligence is transforming how marketers conduct experiments. Instead of waiting weeks to gather enough data, AI-driven testing can analyze user behavior in real time and adjust variables dynamically. Machine learning algorithms identify patterns quickly, reducing the time needed to reach actionable conclusions.

For example, AI-powered platforms can test multiple elements simultaneously, then automatically push the winning variation to the majority of users. This continuous optimization ensures that campaigns are always performing at their best without requiring manual intervention.

The benefits of AI-driven testing include:

This shift allows marketers to move from reactive decision-making to proactive, predictive strategies.
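
One family of techniques that behaves this way is the multi-armed bandit. The article does not name a specific algorithm, so the Python sketch below is only an illustration of the idea, using Thompson sampling on simulated visitors. The "true" conversion rates are invented and exist only to drive the simulation; a real platform would observe actual user behavior instead.

```python
import random

def thompson_sampling(true_rates, rounds=20_000, seed=42):
    """Simulate a bandit that shifts traffic toward the better version."""
    rng = random.Random(seed)
    # Beta(1, 1) starting belief per version: [conversions + 1, misses + 1]
    stats = {name: [1, 1] for name in true_rates}
    shown = {name: 0 for name in true_rates}
    for _ in range(rounds):
        # Draw a plausible conversion rate for each version, show the highest
        draws = {name: rng.betavariate(a, b) for name, (a, b) in stats.items()}
        chosen = max(draws, key=draws.get)
        shown[chosen] += 1
        # Simulated visitor response (stand-in for real behavior)
        if rng.random() < true_rates[chosen]:
            stats[chosen][0] += 1
        else:
            stats[chosen][1] += 1
    return shown

# Invented rates: version B converts better than version A
shown = thompson_sampling({"A": 0.05, "B": 0.10})
print(shown)
```

Unlike a fixed 50/50 split, this approach sends fewer visitors to the weaker version as evidence builds up, at the cost of making the final comparison harder to analyze with classic significance tests.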

Another major trend shaping the future of A/B testing is personalization. Traditional testing delivers insights at the group level, but not all users respond the same way to a single variation. Personalization addresses this by tailoring content, offers, and experiences to individual user segments—or even to each user in real time.

With advanced data collection and AI, businesses can serve different variations to different audience groups based on demographics, interests, or browsing behavior. For example, one version of a landing page might resonate with first-time visitors, while another works better for returning customers.

This approach creates:

As personalization becomes standard, the role of A/B testing will expand from identifying a single winning version to orchestrating dynamic, user-specific experiences across all touchpoints.
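
A simple starting point for segment-level personalization is to break test results down by audience group. The Python sketch below uses invented visitor and conversion counts for two hypothetical segments and picks the version with the higher conversion rate in each one.

```python
# Hypothetical results: (segment, version) -> (visitors, conversions)
results = {
    ("first_time", "A"): (4000, 120),
    ("first_time", "B"): (4000, 180),
    ("returning", "A"): (3000, 240),
    ("returning", "B"): (3000, 195),
}

def best_variant_per_segment(results):
    """Return {segment: (version, conversion_rate)} for the top version."""
    best = {}
    for (segment, variant), (visitors, conversions) in results.items():
        rate = conversions / visitors
        if segment not in best or rate > best[segment][1]:
            best[segment] = (variant, rate)
    return best

best = best_variant_per_segment(results)
for segment, (variant, rate) in best.items():
    print(f"{segment}: serve version {variant} ({rate:.1%} conversion rate)")
```

Each segment contains fewer visitors than the full audience, so a difference inside a segment should pass its own significance check before it drives what users see.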

A/B testing is equally useful for any digital marketing or PPC agency, whether in Bangalore or anywhere else. When each facet of your marketing strategy is backed by specific data, you are in a better position to improve your online marketing efforts and outcompete your rivals.

It is important to note that A/B testing in digital marketing is a continuous process. Marketing departments should always have tests running, review the outcomes, and implement changes. With each test, you learn more about your target audience and its behavior, which helps you get better results.

From a single web page to the entire marketing funnel, A/B testing provides the empirical evidence needed to make reliable decisions in digital marketing. Start testing today and bring out the best in your marketing campaigns!